CSIRO Computing History, Appendix 1: Timeline

Last updated: 30 Apr 2025

Updated peak performance graph, milestones, and added IBM TS1170 drive.

Added virga, Spectra Logic.

Robert C. Bell


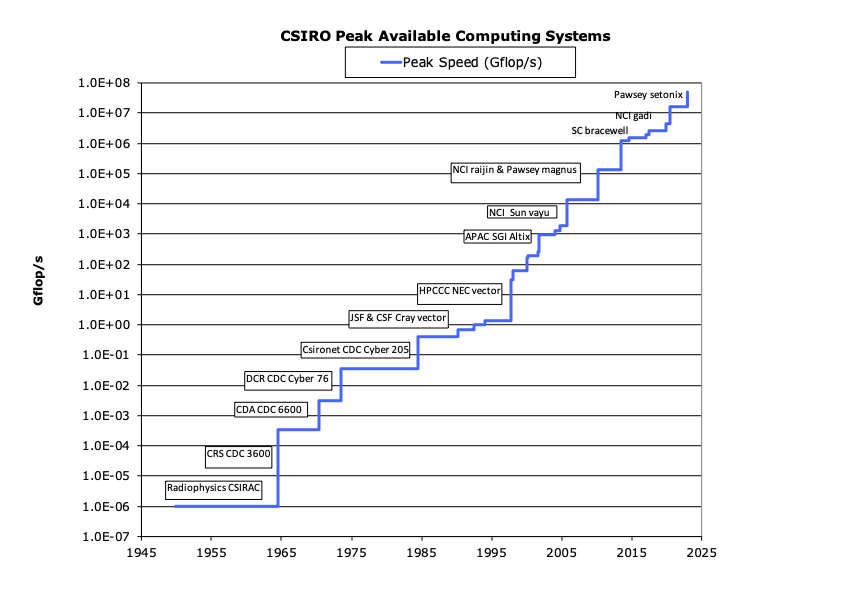

Peak Computer Performance

The above graph shows the peak computing performance of the most capable computer available to CSIRO since CSIR Mk 1 in 1949. The performance has increased by nearly 14 orders of magnitude to 2023. From now on, the comparison becomes harder to extend, because it is difficult to include the ‘cloud’ resources available to CSIRO.
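As a rough illustration of what that growth implies, the sketch below converts an increase of 14 orders of magnitude over the 74 years from 1949 to 2023 into an average annual growth factor and a doubling time. It assumes steady exponential growth, which the graph only approximates, and it rounds "nearly 14" up to 14; the function name is hypothetical.

```python
import math

def implied_growth(orders_of_magnitude, start_year, end_year):
    """Return (annual growth factor, doubling time in years).

    Assumes steady exponential growth between the two years.
    """
    years = end_year - start_year
    annual_factor = 10 ** (orders_of_magnitude / years)
    doubling_time = years * math.log10(2) / orders_of_magnitude
    return annual_factor, doubling_time

factor, doubling = implied_growth(14, 1949, 2023)
print(f"average growth: {100 * (factor - 1):.0f}% per year")
print(f"doubling time: {doubling:.2f} years")
```

Under these assumptions the peak performance grew by a little over 50% per year on average, doubling roughly every year and a half.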

Timeline of CSIRO Computing events

See the book McCann, Doug & Thorne, Peter (2000) for a timeline for CSIR Mk 1 (CSIRAC)

- 1962: A proposal from Trevor Pearcey with the support of E.A. Cornish from DMS for the establishment of a ‘network’ of computing facilities was approved.

- 1963 Jan 01: C.S.I.R.O. Computing Research Section established in Canberra, with Godfrey Lance as OIC.

- 1964: Building (401A) completed at Black Mountain

- 1964: C.S.I.R.O. Computing Network set up with CDC 3600 and three CDC 3200s

- 1966: Introduction of drum storage, display consoles, the CSIRO-developed DAD (Drums and Display) Operating System, and the Document Region – the first on-line digital storage.

- 1967: On-line document storage for extended periods

- 1967: First operational disc storage (CSIRAC did have a small disc).

- 1967 Oct 01: CSIRO Computing Research Section became the Division of Computing Research

- 1968: First DEC PDP

- 1969-70: Wide area network (Csironet) started

- 1973 Jun 09: CDC Cyber 76 installed

- 1973 Jul 13: CDC Cyber 76 acceptance tests completed

- 1974-75: COMp80 installed

- 1975-76: Fernbach report

- 1977: CDC 3600 de-commissioned. Peter Claringbold replaces Godfrey Lance as Chief.

- 1977-78: Birch report

- 1978-79: FACOM joint development project commenced. ATL installed.

- 1980-81: VLSI project commenced

- 1981-82: New computer hall in use, micronode development

- 1983-84: TFS in service

- 1984: CDC Cyber 205 in service

- 1985 Nov 29: CDC Cyber 76 de-commissioned

- c. 1986-1987: DIT established, Csironet privatised

- 1989: Supercomputing Facilities Task Force established

- 1990: Cyber 205 de-commissioned. Most CSIRO Divisions ceased using Csironet.

- 1990-1992: Joint Supercomputer Facility; Cray Y-MP2/216 SN 1409 (cherax) at LET in Port Melbourne. Supercomputing Support Group in DIT Carlton.

- 1991 Nov 14: DMF commences on cherax

- 1992-1997: CSIRO Supercomputing Facility; Cray Y-MP4E SN 1918 (cherax II) at the University of Melbourne

- 1993 Jun-1997: CSF STK ACS4400 at the University of Melbourne

- 1997-2004: HPCCC at the Bureau of Meteorology, 150 Lonsdale St Melbourne

- 1997-2000: NEC SX-4 at the Bureau of Meteorology

- 1997-2004: Cray J916se SN 9730? (cherax III) and Data Store at the Bureau of Meteorology

- 1999-2004: NEC SX-5s at the Bureau of Meteorology

- 2003-2010: HPCCC at the Bureau of Meteorology, 700 Collins St Docklands

- 2003-2010: NEC SX-6 at the Bureau of Meteorology

- 2004- : SGI Altix (cherax IV and cherax V) and UV (cherax VI) and Data Store at the Bureau of Meteorology, 700 Collins St Docklands

- 2004-2015: IBM BladeCentre 1350 and iDataplex (burnet and nelson) clusters at the Bureau of Meteorology, 700 Collins St Docklands

- 200?- GPU cluster in Canberra

- 2004-08: High Performance Scientific Computing

- 2006: HPSC moved from management by CMIS to management by IM&T

- 2007: Review of CSIRO ASC

- 2008: HPSC changed name to Advanced Scientific Computing

- 2013 – IM&T Scientific Computing

- 2013 June – NCI raijin in production

- 2014 – Pawsey Centre: Cray XC40 magnus

- 2015 – Pearcey cluster in Canberra

- 2015 Sep – installation of UV3000 in Canberra, to be the replacement cherax (ruby) from 2015 Nov 09.

- 2015 Nov – cut-over from cherax at Docklands to UV3000 ruby at CDC, including Data Store. Data Store uses tape drives at Black Mountain and Clayton, providing full dual-site protection.

- 2016 Sep – Alf Uhlherr retires as Scientific Computing Services manager, and is replaced by John Zic from Data61.

- 2017 – Bracewell replaces bragg as GPU cluster

- 2018 Nov – MAID decommissioned.

- 2019 Nov – NCI gadi initial system in production

- 2020 Jan – NCI gadi full configuration in production

- 2021 Apr – ruby decommissioned – the last host to have the Data Store directly mounted (as /home)

- 2021 Jun – SC petrichor cluster in service

- 2022 May – Pawsey setonix phase 1

- 2022 May – Spectra Logic tape library

- 2023 Dec – Pawsey setonix phase 2

- 2024 Apr – SC virga replaced bracewell

A history and timeline of the systems at ANU (SF, APAC, NCI) can be seen here.

Timeline of storage technologies

The table below provides a tabulation of the generations of storage (mainly magnetic tape) used by CSIRO in its scientific computing services.

It leaves out any consideration of low-end tape products, such as audio cassettes, 8 mm Exabyte, 4 mm DAT, DECtape, QIC, Travan.

| Date [1] | Organisation | Location | Host | Tape drive | Tape capacity | Speed (Mbyte/s) | Automation | Software | Relative capacity | Relative speed |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1964 | CSIRO CRS | NSW, SA, VIC | CDC 3200 | CDC 603 7tr [3] [2] | 5.0 Mbyte | 0.0834 | – | SCOPE | 1.00 | 1.00 |
| 1964 | CSIRO CRS | ACT | CDC 3600 | CDC 607 7tr [3] [2] | 6.7 Mbyte | 0.12 | – | SCOPE | 1.34 | 1.44 |
| 1966 | CSIRO CRS | ACT | CDC 3600 | Disc and drums | | | “ | DAD | | |
| 1968 | CSIRO DCR | ACT | CDC 3600 | Disc, drums and tape | | | “ | Document Region | | |
| 1971 | CSIRO DCR | ACT | CDC 3600 | Disc, drums and tape | | | “ | DR – HSM | | |
| 1973 | CSIRO DCR | ACT | CDC Cyber 76 | CDC 669 9tr 1600 bpi | 46 Mbyte | 160, 240, 320 | | SCOPE 2 | 9.2 | |
| 1979-1984 | CSIRO DCR | ACT | FACOM | STK 9tr 6250 bpi | 160 Mbyte | 1.25 | Calcomp/Braegan ATL | TFS | 32.0 | 15 |
| 1990 | JSF | Vic | Cray Y-MP | IBM 3420 [4] | 170 Mbyte | 1.25 | | UNICOS | 34.0 | 15 |
| 1991 | JSF | Vic | Cray Y-MP | STK 3840 | 240 Mbyte | 3 | | DMF – HSM | 48.0 | 36 |
| 1993 | CSF | Vic | Cray Y-MP | STK 3840 | 240 Mbyte | 3 | STK ACS4400 | DMF – HSM | 48.0 | 36.0 |
| 1995 | QSL | Qld | Convex SPP-1600 | STK | | | STK ACS4400 | Unitree? – HSM | | |
| 1996 | CSF | Vic | Cray Y-MP | STK 3490 | 500 Mbyte | 4.5 | STK ACS4400 | DMF – HSM | 100 | 54.0 |
| c. 1998 | HPCCC | Vic | Cray J90se | STK SD-3 | 50 Gbyte | 10 | STK ACS4400 | DMF – HSM | 10000 | 120 |
| c. 2000 | HPCCC | Vic | Cray J90se | STK T9840A | 10 Gbyte | 10 | STK ACS4400 | DMF – HSM | 2000 | 120 |
| c. 2000 | HPCCC | Vic | Cray J90se | STK T9940A | 60 Gbyte | 10 | STK ACS4400 | DMF – HSM | 12000 | 120 |
| 2004 | HPCCC | Vic | SGI Altix | STK T9840C | 40 Gbyte | 30 | STK Powderhorn | DMF – HSM | 8000 | 360 |
| 2004 | HPCCC | Vic | SGI Altix | STK T9940B | 200 Gbyte | 30 | STK Powderhorn | DMF – HSM | 40000 | 360 |
| 2008 | HPSC | Vic | SGI Altix | STK T10000A | 500 Gbyte | 120 | STK SL8500 | DMF – HSM | 100000 | 1440 |
| 2008 | HPSC | Vic | SGI Altix | STK T10000B | 1 Tbyte | 120 | STK SL8500 | DMF – HSM | 200000 | 1440 |
| 2012 | ASC | Vic | SGI Altix | STK T10000C | 5 Tbyte | 240 | STK SL8500 | DMF – HSM | 1000000 | 2880 |
| 2014 | Pawsey | WA | SGI | IBM TS1140 | 4 Tbyte | 250 | Spectra T-Finity | DMF – HSM | 800000 | 3000 |
| 2015 | IMT SC | ACT/VIC | SGI UV | STK T10000D | 8.5 Tbyte | 252 | STK SL8500 | DMF – HSM | 1700000 | 3000 |
| 2020 | IMT SC | ACT/VIC | SGI UV | IBM TS1160 [6] | 20 Tbyte | 400 | IBM TS4500 | DMF – HSM | 4000000 | 4800 |
| 2022 | IMT SC | ACT/VIC | HPE | IBM TS1160 [6] | 20 Tbyte | 400 | Spectra Logic | DMF – HSM | 4000000 | 4800 |
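The two relative columns appear to be ratios against the first row (the 1964 CDC 603, at 5.0 Mbyte and 0.0834 Mbyte/s). A minimal sketch of that calculation follows, assuming decimal units (1 Gbyte = 1000 Mbyte) and using hypothetical names; it is an illustration of the arithmetic, not the method used to build the table.

```python
# Baseline: the 1964 CDC 603 drive in the first row of the table.
BASE_CAPACITY_MBYTE = 5.0
BASE_SPEED_MBYTE_S = 0.0834

# Assumption: decimal units (1 Gbyte = 1000 Mbyte, 1 Tbyte = 1,000,000 Mbyte).
MBYTE_PER_UNIT = {"Mbyte": 1, "Gbyte": 1_000, "Tbyte": 1_000_000}

def relative_to_baseline(capacity, unit, speed_mbyte_s):
    """Return (relative capacity, relative speed) against the CDC 603 baseline."""
    capacity_mbyte = capacity * MBYTE_PER_UNIT[unit]
    return capacity_mbyte / BASE_CAPACITY_MBYTE, speed_mbyte_s / BASE_SPEED_MBYTE_S

# A few rows taken from the table: (drive, capacity, unit, speed in Mbyte/s).
rows = [
    ("CDC 607", 6.7, "Mbyte", 0.12),
    ("STK T9940B", 200, "Gbyte", 30),
    ("IBM TS1160", 20, "Tbyte", 400),
]

for drive, capacity, unit, speed in rows:
    rel_capacity, rel_speed = relative_to_baseline(capacity, unit, speed)
    print(f"{drive}: capacity x{rel_capacity:,.2f}, speed x{rel_speed:,.0f}")
```

The computed speed ratios come out slightly below the tabulated ones for the later drives (about 4796 rather than 4800 for the TS1160), which suggests the table's relative speeds are rounded.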

Notes and References

[1] Year of first use by CSIRO

[2] The Scientific Computing Network Memorandum no. 1

[3] CRS Newsletter no. 11, Wikipedia https://en.wikipedia.org/wiki/Magnetic_tape_data_storage – assumed tape length of 1200 ft. 256 * 48 / 8 bytes per 3.31 inch -> 1200 * 12 * 256 * 6 / 3.31 bytes = 6,682,296 bytes (about 6.7 Mbyte)

[4] https://en.wikipedia.org/wiki/9_track_tape

[5] https://en.wikipedia.org/wiki/StorageTek_tape_formats

[6] https://en.wikipedia.org/wiki/IBM_3592

[7] CSIRO Scientific Computing Data Store History – visible to CSIRO only – https://confluence.csiro.au/display/SC/SC+Data+Store+History

[8] Wikipedia – Magnetic Tape – https://en.wikipedia.org/wiki/Magnetic_tape

[9] Wikipedia – IBM 3592 – https://en.wikipedia.org/wiki/IBM_3592

In August 2023 IBM announced the TS1170 tape drive with 50 Tbyte cartridges.

Storage holdings

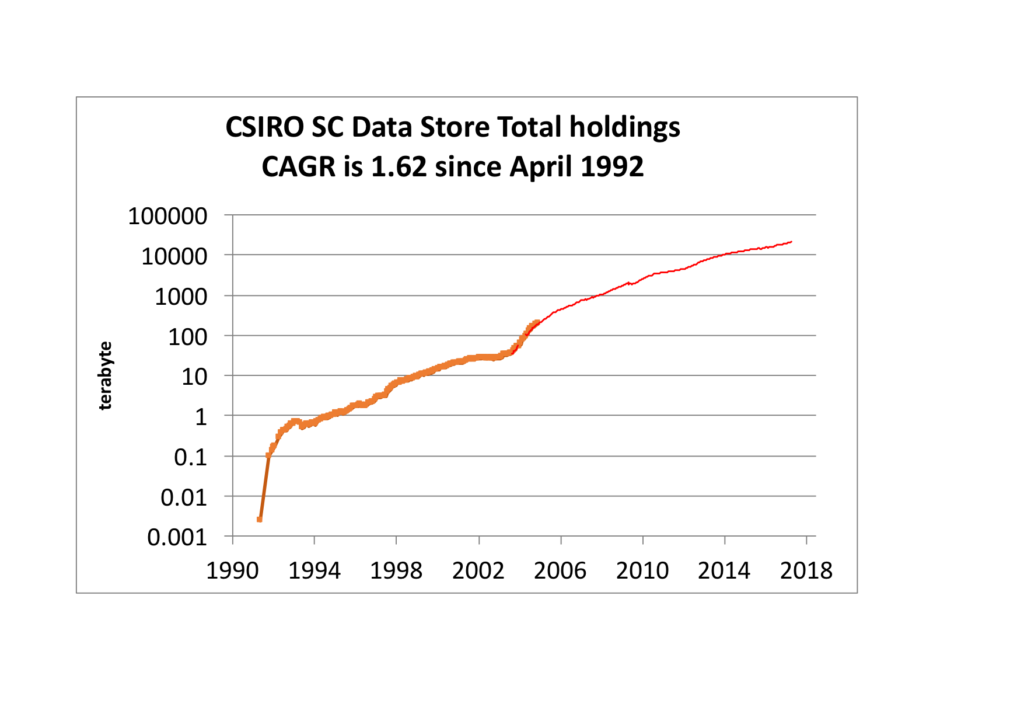

The graph shows the holdings in the CSIRO Scientific Computing Data Store (and predecessors) from 1992 to 2019. Note the log scale. CAGR is compound annual growth rate; the implication is that holdings have grown by 62% per annum over this period.
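For readers unfamiliar with CAGR, the sketch below shows the usual definition and what a 62% annual rate compounds to over the 27 years from 1992 to 2019. It takes the stated rate at face value; the function names are hypothetical, and no actual holdings figures are assumed.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1

def growth_factor(rate, years):
    """Total multiplication after compounding `rate` for `years` years."""
    return (1 + rate) ** years

years = 2019 - 1992
total = growth_factor(0.62, years)
print(f"62% per annum over {years} years multiplies holdings by about {total:.2e}")

# Round trip: recovering the rate from the total growth gives back 62%.
print(f"recovered CAGR: {cagr(1.0, total, years):.0%}")
```

Compounded over the whole period, a 62% annual rate corresponds to growth of more than five orders of magnitude, which is why the graph needs a log scale.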