CSIRO Computing History, Chapter 7

Chapter 7. The HPCCC – Bureau of Meteorology

Updated: 30 April 2021.

Updated 30 Jan 2023, to add more details on the 2003/2004 Data Store upgrade.

Last updated 07 Aug 2024 to add announcement of HPCCC.

Robert C. Bell


In late 1995, Ed Hayes from the Ohio State Supercomputer Center visited Australia and spoke about supercomputing. A dinner was arranged with him, attended by senior CSIRO and Bureau of Meteorology staff with interests in supercomputing.

In early 1996, Malcolm McIntosh, the incoming CSIRO CEO, was reported to have said to a CSIRO Chief: “What are we doing about supercomputing: I’m prepared to sign off on a joint facility with the Bureau of Meteorology.”

With that mandate, CSIRO and Bureau staff went to work and prepared a tender for a new shared supercomputer. Fujitsu, NEC and Cray were the main contenders. In April 1997 the decision was made to award a contract to NEC for an SX-4 system, and to set up a joint High Performance Computing and Communications Centre (HPCCC). (The decision to award the contract to NEC was controversial, because the selection criteria had not been nailed down sufficiently before evaluation commenced. CSIRO had specified that a Fortran 90 compiler was mandatory for its benchmark tests, whereas the Bureau had not. On the CSIRO benchmarks the Cray T90 results were better than the NEC SX-4 results, but the reverse was the case for the Bureau tests, which used the Fortran 77-compliant compiler. As it turned out, awarding the contract to NEC was a good decision: Cray Research staff later confided that the T90 would not have been a good solution because of reliability problems. Even so, the NEC system proved to have good hardware but poorer software: it ran SUPER-UX, which even then was based on an old version of UNIX. The T90 would have provided the first-class UNICOS operating system, but with inferior and more costly hardware.)

Here is a copy of Dr Malcolm McIntosh’s announcement to all CSIRO staff.

The SX-4 arrived in September 1997 and was installed in the Bureau’s Central Computing Facility on the first floor at 150 Lonsdale Street, Melbourne. Here are some photos of the arrival. A special cage was built to carry each box, and a crane was required to lift each box over a facade and in through a window on the first floor. Cray Research lent NEC a platform, used inside to lower each box from window-sill height to floor height.

CSIRO acquired a Cray J916se (SN 9730) to manage its storage under DMF and to act as a front-end, connected by a high-speed 800 Mbit/s HiPPI link. It also acquired another StorageTek Automated Cartridge System (ACS), and transferred its data holdings from the University of Melbourne to the Bureau, to be hosted on the ACS and the J916se.

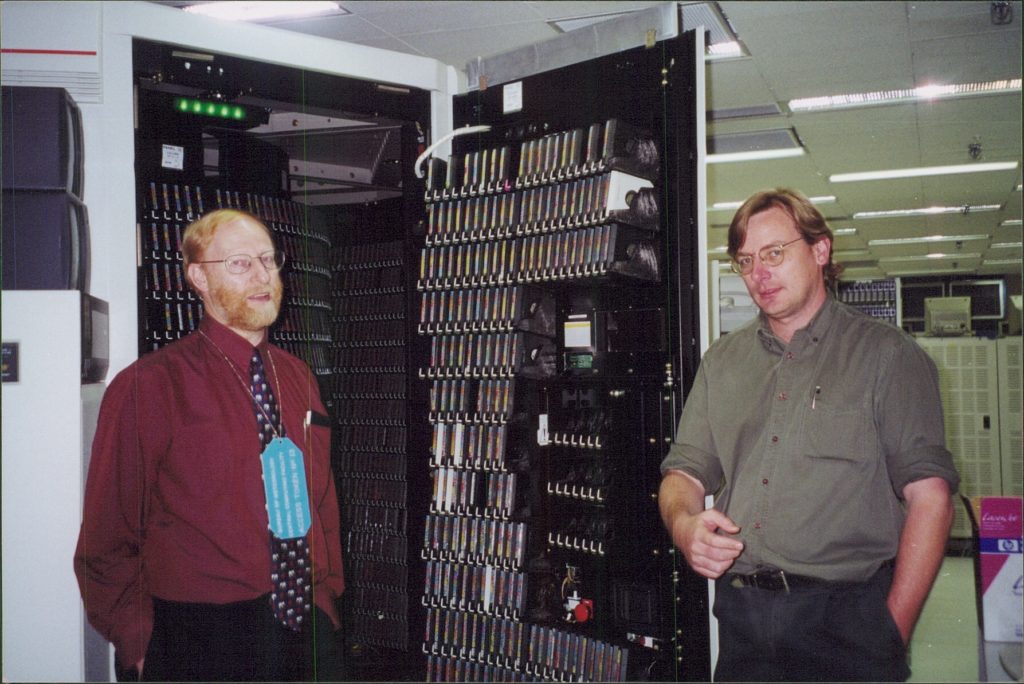

Rob Bell and Jeroen van den Muyzenberg with the STK ACS

There was some contention over the naming of the SX-4: the Bureau wanted to continue its existing naming scheme, whereas CSIRO wanted to recognise that the system was a joint facility and not just a Bureau system. Eventually, agreement was reached to name the system after a famous Australian scientist, and bragg was chosen. This naming tradition has been continued for many subsequent CSIRO Scientific Computing computers.

The four CSIRO staff of the Supercomputing Support Group (Rob Bell, Len Makin, Marek Michalewitz and probably Virginia Norling) moved to the 24th floor of the Bureau’s headquarters in September 1997. There they joined Bureau staff (Steve Munro, Rob Thurling, Ann Eblen and probably Richard Oxbrow) to establish the HPCCC in newly fitted-out premises. Steve Munro was the first HPCCC Manager, and Rob Bell was Deputy Manager. Rob Bell could not act as Manager because he did not hold Bureau delegations, so there was a succession of acting managers (Mike Hassett, Rodney Davidson, Terry Hart and others).

The HPCCC agreed to the contract with NEC on the proviso that NEC produce a ‘political’ scheduler and a data management solution. By the end of 1997 NEC had written the Enhanced Resource Scheduler (ERS) to the HPCCC’s specifications, which were principally written by Rob Thurling and Rob Bell. ERS ran on the NEC SX systems until their end. NEC also made it into a product, which was run at several other NEC sites (see NEC’s announcement of the SX-5, which included a reference to ERS: http://www.nec.co.jp/press/en/9806/0401.html ). ERS was initially called the Advanced Resource Scheduler (ARS), until John O’Callaghan pointed out to a senior NEC manager which part of the anatomy this referred to! ERS provided pre-emption of jobs: when a high-priority job arrived and the system did not have the resources (e.g. CPUs) to run it, lower-priority jobs were held and checkpointed to free up sufficient resources. NEC enhanced ERS for the multi-node SX-6 systems, and additionally provided the capability of migrating checkpointed jobs from one node to another. HPCCC staff, and CSIRO staff in particular, provided much guidance to NEC, so that ERS evolved rapidly to meet ongoing needs, such as maintaining the 50:50 ratio of usage between the Bureau and CSIRO.
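The pre-emption behaviour described above can be sketched as follows. This is a minimal single-node illustration in Python, not ERS's actual design, since the internals of ERS are not documented here.

Several simplifications are assumptions made for this sketch:

- The class and method names (`Job`, `Node`, `submit`, `finish`) are hypothetical.
- Moving a job to a "held" list stands in for holding and checkpointing it.
- A job that cannot start is simply refused rather than queued.
- Multi-node migration and the 50:50 usage accounting are not modelled.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare jobs by identity, so list.remove() drops the exact job
class Job:
    name: str
    priority: int          # hypothetical: a higher number means more important
    cpus: int
    state: str = "queued"  # queued -> running -> held (checkpointed) -> running -> done


@dataclass
class Node:
    total_cpus: int
    running: list = field(default_factory=list)
    held: list = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.total_cpus - sum(j.cpus for j in self.running)

    def submit(self, job: Job) -> bool:
        """Start a job, pre-empting lower-priority jobs if needed; True if it started."""
        if job.cpus > self.free_cpus():
            # Only strictly lower-priority running jobs may be pre-empted, lowest first.
            victims = sorted((j for j in self.running if j.priority < job.priority),
                             key=lambda j: j.priority)
            if self.free_cpus() + sum(j.cpus for j in victims) < job.cpus:
                return False  # even pre-empting every candidate would not free enough CPUs
            for victim in victims:
                if self.free_cpus() >= job.cpus:
                    break
                self.running.remove(victim)
                victim.state = "held"  # stand-in for hold + checkpoint
                self.held.append(victim)
        job.state = "running"
        self.running.append(job)
        return True

    def finish(self, job: Job) -> None:
        """Complete a job, then resume held jobs (highest priority first) that now fit."""
        self.running.remove(job)
        job.state = "done"
        for held_job in sorted(self.held, key=lambda j: -j.priority):
            if held_job.cpus <= self.free_cpus():
                self.held.remove(held_job)
                held_job.state = "running"
                self.running.append(held_job)


if __name__ == "__main__":
    node = Node(total_cpus=32)
    for i in range(4):
        node.submit(Job(f"research{i}", priority=1, cpus=8))  # fills all 32 CPUs
    forecast = Job("forecast", priority=10, cpus=16)
    node.submit(forecast)                                     # pre-empts two research jobs
    print("held:", [j.name for j in node.held])               # held: ['research0', 'research1']
    node.finish(forecast)                                     # the held jobs resume
    print("running:", sorted(j.name for j in node.running))
```

The sketch pre-empts the lowest-priority jobs first and stops as soon as enough CPUs are free, so it disturbs as little low-priority work as possible. A real scheduler would also need to persist checkpoints and decide where resumed jobs run.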

Less successful was NEC’s data management solution, SX-Backstore. Again, HPCCC staff (principally Rob Thurling and Rob Bell) wrote specifications for an HSM (hierarchical storage management system), and NEC enhanced its very basic product. Testing was done at the HPCCC, and one test checked recoverability. The steps were: create a file system loaded with about 30,000 files, migrate the files, dump the remaining data on the file system (which took 6 minutes to disc and 7 minutes to tape), and then wipe the entire contents of the file system. The next task was to restore the file system from the dumps, which was found to take 46 hours: completely unacceptable. (There was clearly an n-squared component to the restore procedure, where n is the number of files.) NEC reported that fixing the problem would require a rewrite. This was the end of the HPCCC’s efforts to support NEC’s SX-Backstore development, and some compensation was negotiated with NEC. CSIRO continued with its DMF solution on the J90, giving users direct access to the migrating file system. The Bureau had previously run EPOCH, then SAM-FS, as well as DMF on a Cray. It now abandoned DMF and concentrated on SAM-FS, run as an archive facility rather than an HSM.

In early 1998, the SX-4 was upgraded to its full 32 CPUs.

In 1999, after a group from the HPCCC visited Japan for an evaluation, an SX-5 (florey) was acquired, and in 2000 a second SX-5 (russell) was acquired to replace the SX-4. The second SX-5 was specifically targeted at providing additional capacity and resilience for forecasting for the Sydney 2000 Olympics.

In a Bureau publication to mark its 50 years of computing, a photo like the following was included, with the caption “Symbols of cooperation: Robert Bell of CSIRO with the jointly operated NEC SX-5.”

Eva Hatzi joined the HPCCC for about a year in 1999-2000. She was followed by Jeroen van den Muyzenberg, who took over the J916se and Data Store management from Virginia Norling. Bob Smart joined the HPCCC from CSIRO DIT in 2000. Bob concentrated on networking, and on building up services for the future, particularly Linux-based services. He set up and managed the first cluster, the Beehive. It had six Dell blades (numbered b1 to b6), which provided front-end services for users, as well as web and other services. This entry into Linux became an important part of the transition of most scientific computing and storage to Linux-based hosts. Here’s a picture of the Beehive in August 2003.

In 2002-03 the HPCCC tendered again, and contracted with NEC to acquire an SX-6/TX7 system to be situated at the Bureau’s new headquarters at 700 Collins Street, Docklands. The initial system was delivered in December 2003 into an unfinished building, and staff moved to Docklands in August 2004. CSIRO had decided not to continue a 50:50 partnership with the Bureau in the SX-6 system. Instead it took a 25% share: 5 out of 28 nodes, plus one of the two TX7 front-ends (eccles and mawson). CSIRO chose to channel the funds saved into systems more closely tailored to its needs.

Here is an unedited video from Bob Smart showing the partially complete building at 700 Collins Street and the installation of equipment in the Central Computing Facility on level 11. Featured are Bob, Richard Oxbrow (BoM), Colin Paisley (NEC) and an unnamed NEC Japan engineer.

Phil Tannenbaum, formerly with NEC in the USA, became HPCCC Manager in about 2002. In drawing up the requirements for the next system, I devised an I/O benchmark (clog) and a workload benchmark. The workload benchmark was designed to evaluate whether the tendered systems could sustain maximum utilisation. It had three phases:

1. The system had to run a large load of low-priority jobs.
2. When the arrival of operational jobs was simulated, it had to pre-empt those low-priority jobs so that the operational jobs could promptly access all their required resources.
3. When the operational jobs had finished, it had to resume running the low-priority jobs.

On the day NEC submitted its tender, one of the staff muttered the word ‘sadist’ to me! In the end, only NEC was able to run the workload benchmark and demonstrate that its systems could handle such a workload. A team visited NEC in May 2003 for a live test demonstration (LTD), which NEC ran successfully. Current HPC and batch systems seem unable to provide this functionality, as shown by the failure of NCI (since 2013) and of the Bureau (from 2017 to 2019) to provide such a service.

Here is a photograph of HPCCC and NEC staff about to start the LTD at NEC in Japan.

From L to R: Phil Tannenbaum (HPCCC), Dave Parkes, Chris Collins, Ed Habjan, Joerg Henrichs, Stephen Leak, Aaron McDonough (all NEC), Ann Eblen (HPCCC), Les Logan (Bureau R&D). Missing from the photo are Rob Bell (HPCCC) and Ilia Bermous (Bureau R&D).

Here are some pictures showing the build and installation of the NEC SX-6, the STK tape library and the SGI Altix in the Central Computing Facility, level 5, 700 Collins Street, Docklands, Victoria.

The first shows one of the two rows of SX-6 cabinets; there were 18 nodes initially, and later 28. The cables are for the crossbar switch, which provided 8 Gbyte/s to each node, far ahead of any commodity interconnect at the time.

The second shows the switch cabinets, with masses of cables – watching are Richard Oxbrow from the HPCCC/Bureau, and Rhys Francis from CSIRO HPSC.

The next shows Erika Stojanovic (CSIRO HPSC) highlighting one of the panels of the SX-6 – each had an individual design, reminiscent of chaos or partly-stirred paint.

Here’s the SGI Altix 3700, with staff from SGI – Peter McGonigal, Dale Brantly (who travelled from the USA to oversee the transition of the data holdings from the Cray Research J916se to the Altix), and Nick Gorga.

And here is a tape library waiting to be assembled.

CSIRO has received superb support from its vendors and staff through the years; in my time, most notably from Cray Research, StorageTek, NEC, SGI and IBM. Here is a picture of the NEC Australia staff at the SX-5 decommissioning in 2004. From L to R: Colin Paisley, Joerg Henrichs, Jack Dutkiewicz, Stephen Leak, Hiroko Telford, Aaron McDonough, Ed Habjan and Paul Ryan. In the shadows are Peter Gigliotti (Bureau) and Rhys Francis (CSIRO).

When the HPCCC selected the SX-5 (instead of another SX-4), NEC adapted the design so that the central core of the system would fit in the goods lift at 150 Lonsdale Street. When the SX-5s were decommissioned, the NEC engineers had the job of pulling the machines apart, and the extent of the internal wiring became evident. With 16 CPUs and 16,384 memory banks, and a connection from every CPU to every memory bank, we estimated that there had to be at least 16 × 16,384 = 262,144 wires: hundreds of kilometres! Here is a picture of Jack and Colin dismantling an SX-5.

In 2003/2004, CSIRO went to tender for a system to manage the data holdings then managed by DMF under UNICOS on the Cray J90. The tender called for a new system and the transfer of the data. NEC, Cray and Silicon Graphics were the main contenders. NEC proposed Legato’s DiskXtender, and Cray proposed StorNext. Unfortunately, for the volumes of data CSIRO held then and envisaged in the near future, both the Cray and NEC proposals included licence costs directly proportional to the quantity of data stored, and these costs were greater than the tape media costs. SGI won with DMF running on an IRIX system, but CSIRO instead chose an Intel-based system running Linux, the Altix 3700. It was installed at Docklands along with a new STK Powderhorn tape library (6000-slot capacity). With the released funds, CSIRO acquired an IBM BladeCenter cluster (burnet), and upgraded the Altix (still called cherax) to 64 processors. CSIRO also acquired another BladeCenter cluster, nelson, as a development platform for the ROAM application of the BlueLink project. BlueLink was a partnership of the Bureau, CSIRO Marine and Atmospheric Research, and the Royal Australian Navy.

Here is a picture of the entrance to the HPCCC offices on the 24th floor of 150 Lonsdale Street, in December 2003.

Here’s a picture of the new HPCCC office area on level 11, 700 Collins St, on the day of arrival in August 2004.

Here are pictures of the Bureau’s new Head Office building, and views of the surrounding Docklands area (including the Southern Cross Station under construction) in August 2004.

Here is a picture of the rear of the racks of the CSIRO IBM BladeCenter clusters, burnet and nelson, in March 2005.

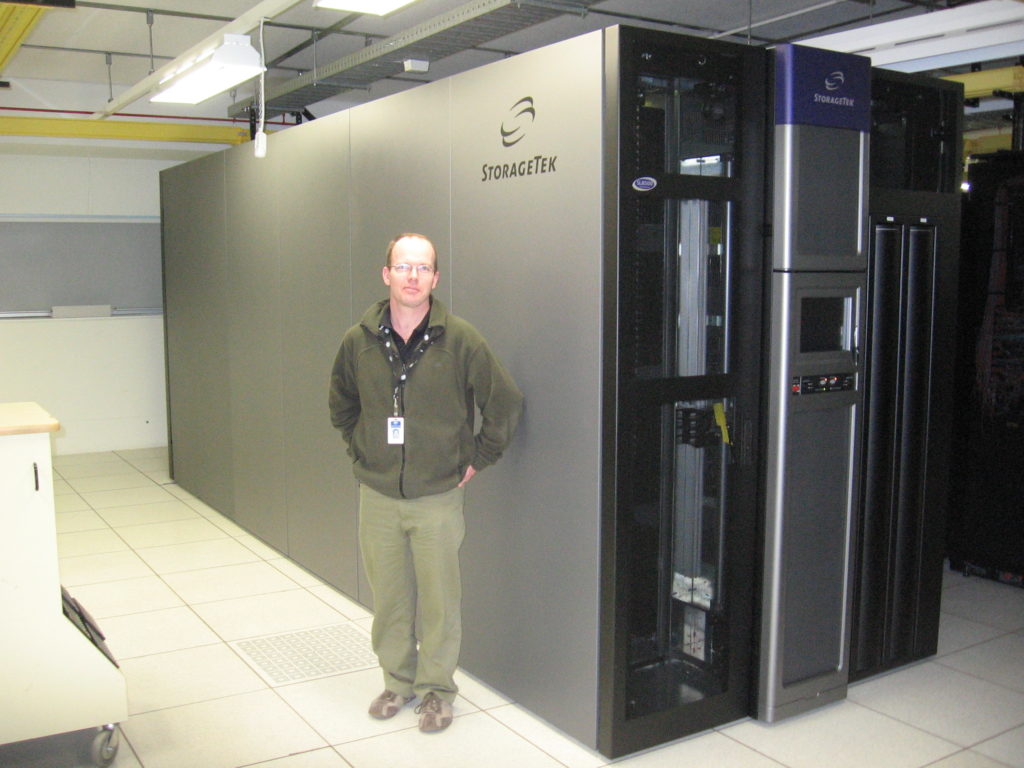

Below is a picture of CSIRO’s STK Powderhorn tape libraries at Docklands in June 2009, just prior to decommissioning in favour of the STK SL8500 tape libraries.

Here’s Gareth Williams with CSIRO’s SL8500 tape library in August 2009.

Here’s a view of the inside of the SL8500.

In April 2008, an RFT (request for tender) was issued for new supercomputer systems for the Bureau and ANU. The Bureau machine was to be purely for Bureau operational use, while CSIRO and the Bureau were to have access to a new system at ANU for research computation under the NCI agreement. The contract was awarded to Sun Microsystems close to the time when Sun was being acquired by Oracle, which later caused some difficulties!

CSIRO ceased using the NEC SX-6/TX7 system in December 2009, and the system was finally shut down in August 2010. CSIRO continued to have staff and associated systems at Docklands until July 2015, when most staff moved to Clayton (one staff member left rather than move to Clayton, and another was made redundant). The final general CSIRO system at Docklands was an SGI UV1000, cherax, which was replaced by ruby in Canberra in November 2015. This last cherax was removed on 16 December 2015. The final CSIRO-accessible system, ROAM, was removed in December 2018.

(For CSIRO staff, some history of cherax and the Data Store from 1990 can be seen here, including lists of the 5 sites, the 5 principal systems administrators, the 8 hosts and the 12 tape technologies.)

Thus ended the HPCCC, although Rob Bell remained at the Bureau on part-time secondment until his retirement in March 2019.

One of the Cray Research staff remarked that a Bureau staff member described the HPCCC as a once-in-a-lifetime chance for the Bureau to spend other people’s money (i.e. CSIRO’s)!

See also the Applications Software sidebar.