CSIRO Computing History, Chapter 9, CSIRO ASC, and Pawsey Supercomputing Centre

Chapter 9. CSIRO ASC, and Pawsey Supercomputing Centre

Last updated: 29 March 2021.

Robert C. Bell


Of the 25 recommendations, 18 have now been addressed in full. Of these, two (#2 and #24) were one-off implementations, while the other 16 require ongoing commitment. The remaining seven recommendations (#3, #6, #16, #18, #22, #23, #25) have all been at least partially addressed, with clear paths of progression and responsibility.”

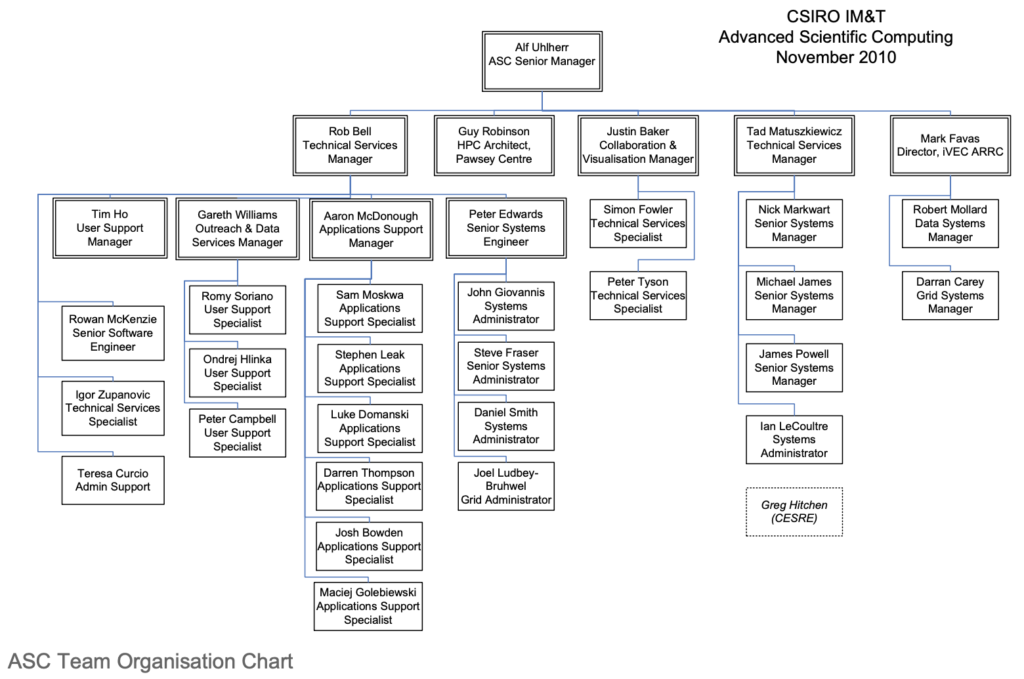

Staff were based at Docklands, Clayton, Canberra, Perth, Hobart and Geelong.

By 2010, a hybrid GPU cluster had been added in Canberra, and CSIRO had ceased using the HPCCC NEC SX-6/TX7 system.

An experimental Storage and Cloud service (stacc) had begun by 2013 and was formally launched as a supported service in July 2014. The service was named bowen (following a suggestion from Alf Uhlherr and myself) after E. G. (“Taffy”) Bowen, former Chief of CSIRO Radiophysics and an early proponent of cloud seeding.

In October 2013, it was announced that ASC would merge into a second branch of IM&T, the Scientific Computing group, with sub-groups Infrastructure (Brian Davis) and Services (Alf Uhlherr). The Infrastructure sub-group would manage the ASC systems as well as CSIRO corporate servers and storage.

Pawsey Supercomputing Centre

See history.

In the year 2000, an unincorporated joint venture with key partners was established and named iVEC.

In 2009, as part of the Commonwealth Government’s Super Science initiative, iVEC secured $80 million in funding to establish a petascale supercomputing facility, housing its computers under one roof at the Australian Resources Research Centre (ARRC) in Perth.

In 2014, iVEC proudly adopted its new name, the Pawsey Supercomputing Centre, in honour of the prominent Australian radio astronomer Joseph Pawsey.

WA universities and CSIRO were the partners in iVEC and Pawsey, with CSIRO taking over management in about 2014. The Pawsey Centre had a purpose-built building at ARRC, and in 2013–14 it acquired two Cray XC-30/XC-40 systems: one dedicated to radio astronomy data acquisition (galaxy) and one for general HPC (magnus). It also acquired a clustered SGI system that drove a DMF-managed data store, using a Spectra Logic T-Finity tape library with IBM TS-series drives.